Extracting Files from Appsense Environment Manager Database

We recently experienced some data loss issues with our Appsense Environment Manager installation. Long story short, a change we made to our application groups ended up deleting the user data associated with a particular application group.

What is Appsense Environment Manager, you ask? It’s a desktop personalization platform, which consists of an agent running on the client and a database to go with it. The agent captures user specific data from registry and file system and stores it in an SQL database. The captured data can then be roamed to other clients where the agent is running. It’s your roaming profile, on steroids.

Back to the story…

After working through the initial morning-after panic, my colleague reached out to Appsense for some assistance. They advised us to get a hold of the database backup from before the change was made and do a full restore. However, by this time, users had been logging on to their machines and generating data in the production database. A full restore was not an option. We needed to selectively restore files. However, restoring files one by one, for each user, from Environment Manager Console was going to be a big hassle.

Our DBAs were kind enough to mount the backup database under a different name so that both the backup and production databases were available for us simultaneously. With that in place and nothing else to lose, we started investigating the database.

Locating the Data

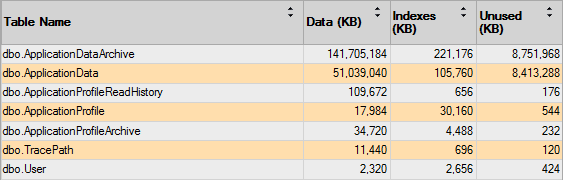

First task was to figure out where the data was located within the database. Since we knew that the actual data is stored within the database, finding the right tables was only a matter of running the Disk Usage by Top Tables report from SQL Server Management Studio.

This report is accessible by right clicking on the database, choosing Reports , then Standard Reports and then Disk Usage by Top Tables.

The report spat out something like this:

We decided to start with dbo.ApplicationData table, instead of archive. From there, we traced some of the foreign key relationships to arrive at the conclusion that we needed the following tables and columns.

dbo.User

This table holds all user information. We just needed the data from the following columns for our purposes.

1

2

UserPK -- primary key

LoginName -- username

dbo.ApplicationData

This table contains the actual data. The columns of interest here are:

1

2

3

4

5

6

7

ApplicationProfileFK -- FK to ApplicationProfile

Filename -- Name of the file

RelativePath -- Path of the file.

Data -- Actual data, data type is Image

Size -- actual size of the file

ModifiedLow -- Last Modified date

ModifiedHigh -- Last Modified data

dbo.ApplicationProfile

This table establishes relationships between users and their stored files. We only need the following columns from this table:

1

2

ApplicationProfilePK -- Primary Key

UserFK -- Foreign Key to User table.</pre>

Final Query

Based on the table structure discovered above, we were able to construct the following query to extract file data and relevant details. This query expects the user’s login name, relative path and name of the file you are looking for.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

SELECT

AD.Data,

AD.Size,

DataLength(AD.Data) As StoredSize,

AD.RelativePath,

AD.ModifiedLow,

AD.ModifiedHigh

FROM

ApplicationData As AD

JOIN

ApplicationProfile As AP ON AD.ApplicationProfileFK = AP.ApplicationProfilePK

JOIN

[User] As Usr ON AP.UserFK = Usr.UserPK

WHERE

User.Loginname = '' AND AD.RelativePath like '%%' AD.[Filename] = ''

C# for the rest

Once we knew the data source, all that was left was to come up with a script or program to extract specific files for specific users. We put together the following C# function to accomplish the rest.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

public static void ExportDataToFile(string username, string path, string filename, string destinationRoot)

{

try

{

// the extracted file will be saved in [destinationRoot]\[username] folder

string destination = Path.Combine(destinationRoot, username);

string saveFilename = Path.Combine(destination, filename);

// if the [destinationRoot]\[username] folder does not exist, create it.

if (!Directory.Exists(destination)) { Directory.CreateDirectory(destination); }

using (var sqlconnection = new SqlConnection("...Your Connection String..."))

{

// open SQL connection.

sqlconnection.Open();

var query = "SELECT AD.Data, AD.Size,DataLength(AD.Data) As StoredSize, "

+ "AD.RelativePath, AD.ModifiedLow, AD.ModifiedHigh "

+ "FROM ApplicationData As AD JOIN ApplicationProfile As AP "

+ "ON AD.ApplicationProfileFK = AP.ApplicationProfilePK "

+ "JOIN [User] As Usr ON AP.UserFK = Usr.UserPK "

+ "WHERE Usr.Loginname = @Loginname "

+ "AND AD.RelativePath like @Path "

+ "AND AD.[Filename] = @Filename ";

// construct SqlCommand using the query above.

var selectCommand = new SqlCommand(query, sqlconnection);

selectCommand.Parameters.AddWithValue("@Loginname", username);

selectCommand.Parameters.AddWithValue("@Path", "%" + path + "%");

selectCommand.Parameters.AddWithValue("@Filename", filename);

// execute it.

var reader = selectCommand.ExecuteReader();

// did we get anything back?

if (reader.Read())

{

byte[] data = (byte[])reader["Data"];

// extract size and stored size

int size, storedsize;

int.TryParse(reader["Size"].ToString(), out size);

int.TryParse(reader["StoredSize"].ToString(), out storedsize);

// extract last modified date information.

long low, high, total;

long.TryParse(reader["ModifiedLow"].ToString(), out low);

long.TryParse(reader["ModifiedHigh"].ToString(), out high);

total = low + (high << 32);

DateTime ft = DateTime.FromFileTime(total);

// if the storedsize value is smaller than size value, the data is most likely zipped

// handle accordingly.

if (size > storedsize)

{

using (var memstream = new MemoryStream(data))

{

using (var gzstream = new GZipStream(memstream, CompressionMode.Decompress))

{

using (var fs = new FileStream(saveFilename, FileMode.Create, FileAccess.Write))

{

var breader = new BinaryReader(gzstream);

var bwriter = new BinaryWriter(fs);

var numArray = new byte[2048];

for (var i = breader.Read(numArray, 0, (int)numArray.Length); i > 0; i = breader.Read(numArray, 0, (int)numArray.Length))

{

bwriter.Write(numArray, 0, i);

}

bwriter.Close();

fs.Close();

}

}

}

// Set newly created file's last write time to what we pulled from the database.

File.SetLastWriteTime(saveFilename, ft);

}

// data is probably not compressed. write it out.

else

{

using (var fs = new FileStream(saveFilename, FileMode.Create, FileAccess.Write))

{

var bwriter = new BinaryWriter(fs);

bwriter.Write(data);

bwriter.Close();

fs.Close();

}

// set newly created file's last write time to what we pulled from the database.

File.SetLastWriteTime(saveFilename, ft);

}

}

}

}

catch (Exception ex)

{

// basic, handle exceptions as you wish.

Console.WriteLine(ex);

}

}

For example, to extract a user’s default signature file, this function can be called in the following fashion:

1

ExtractDataToFile("testuser", "Microsoft\\Signatures", "Default.htm", "C:\ExtractedData");

This will extract {CSIDL_APPDATA}\Microsoft\Signatures\Default.htm for testuser and write it to C:\ExtractedData\testuser\Default.htm

Last Modified Date

ApplicationData table within Environment Manager’s database contains two date columns, ModifiedLow and ModifiedHigh. After some research, we were able to figure out that these correspond to ftLastWriteTime for WIN32_FILE_ATTRIBUTE_DATA Structure, which is a FILETIME structure.

The following C# code can take the ModifiedLow and ModifiedHigh values and convert them into easily usable DateTime value.

1

2

3

4

5

6

7

8

9

// extract last modified date information.

long low, high, total;

long.TryParse(reader["ModifiedLow"].ToString(), out low);

long.TryParse(reader["ModifiedHigh"].ToString(), out high);

total = low + (high << 32);

DateTime ft = DateTime.FromFileTime(total);

// set a file's last write time to the newly calculated DateTime value.

File.SetLastWriteTime(saveFilename, ft);

And that’s how we recovered data selectively from Environment Manager database. Leave a comment below if you end up using this information and don’t forget to thank your DBAs. 🙂